Policy as Code

Welcome to my Policy as Code workshop!

The Cloud is enabling businesses to quickly adopt and use technology in ways that we’ve never imagined before. Security teams need to find ways to keep up; automation is the solution. By using Policy as Code tools we can define and enforce security guardrails. This allows developers and cloud engineers to continue shipping features while giving everybody confidence that security requirements are being met.

In this lab, we get hands-on with pipelines and container runtimes, learn how to distribute the enforcement of our Governance, and centralize our observability of the environment.

Agenda

Getting started

Important

This lab expects that you have an AWS Cloud9 environment configured. Step-by-step instructions to create a Cloud9 environment are available here.

Run the lab setup container.

docker run -it --network host -v /:/host jonzeolla/labs:policy-as-code

You will then be prompted for your client IP.

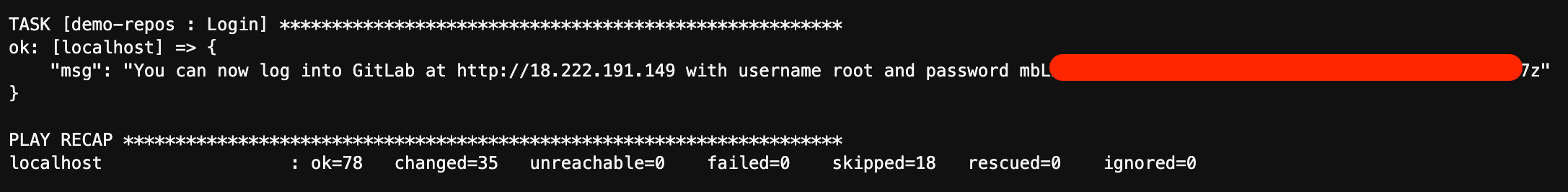

After providing a valid client IP you will see the automation begin to configure your environment. At the end of the setup you should see a message like this:

Use the provided credentials and URL to login to the GitLab instance. You’re now ready to get started on the lab!

Terminology

Governance is the process of making and enforcing decisions within an organization, and includes the process of choosing and documenting how an organization will operate.

A Policy is a statement of intent to be used as a basis for making decisions, generally adopted by members of an organization. Policies are often implemented as a set of standards, procedures, guidelines, and other blueprints for organizational activities.

Policy as Code (PaC) is the use of software and configurations to programmatically define and manage rules and conditions. Examples of popular languages used to implement Policy as Code include YAML, Rego, Go, and Python.

For more background on cloud native policy as code, see the CNCF white papers on Policy and Governance.

PaC in Pipelines

Policy as Code applies across the entire development lifecycle: from early software design and development, through building and distributing code and images and deploying those artifacts, to production runtime. In this lab, we will be focusing on Policy as Code tooling that can be used in pipelines to detect mistakes and prevent them from being deployed to production.

We will be using a very simple demo repository for this lab, which is already set up in GitLab and is also available in your Cloud9 IDE under ~/environment/policy-as-code-demo.

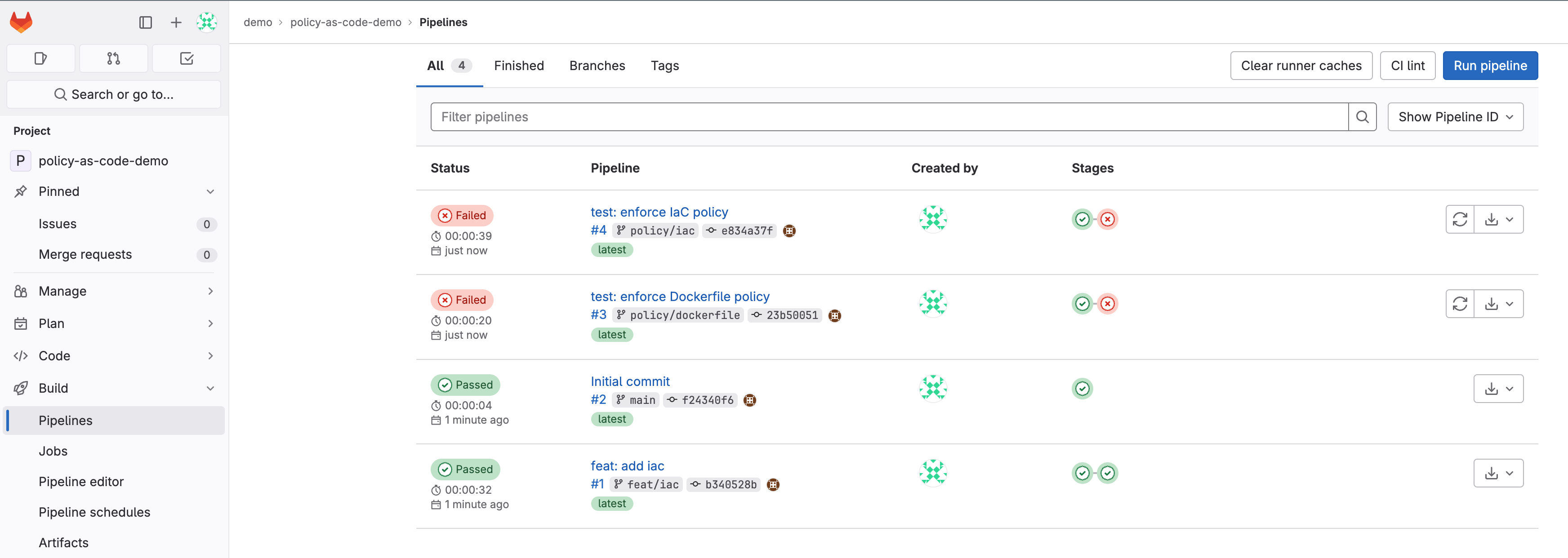

Taking a look, you should see something like this:

Can’t find it?

If you’re having a hard time finding the policy-as-code-demo repo, and the corresponding pipeline, try going to the below URL:

$ export EXTERNAL_IP="$(grep ^Host ~/.ssh/config | awk '{print $2}')"

$ echo "http://${EXTERNAL_IP}/demo/policy-as-code-demo/-/pipelines"

http://18.219.106.231/demo/policy-as-code-demo/-/pipelines

Now that we have an initial project in place, and our pipelines are working, let’s simulate a little real-world activity.

Dockerfiles

If you took a look at our repository, you likely noticed a Dockerfile. Dockerfiles are a set of instructions that Docker uses to build a container image. In most cases when you build an image, especially on behalf of a company, you’d like to ensure that certain fields are always set. One such set of standard fields is the OCI Image spec’s pre-defined annotation keys.

First, let’s look at the existing Dockerfile:

This is a very straightforward image that will just print “Hello, World!” when we run it.

Learn more

To see the Dockerfile specification, see the documentation here.

In it we can see that some fields are set, including a vendor of “Jon Zeolla”. Perhaps this project was done by Jon, but on behalf of his company, and as an organization we’d like all of our developers to specify the correct organizational attribution in the vendor field. We can enforce that with Policy as Code!

First, let’s take a look at our policy definition for Dockerfiles:

Well, that was a lot. Feel free to look closer, or continue on with the lab.

Look Closer

If you look closely, you’ll see some other policies being enforced here. Sometimes we require that a field be dynamic, such as org.opencontainers.image.revision, but even a dynamic field can be held to a strict structure. In this case, we’re saying the revision can be hard coded, but if so it needs to be either 7 or 40 characters of hex, or it can be passed in with a build argument named COMMIT_HASH.
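To make the revision rule concrete, here is a minimal Python sketch of the same check. It is not the lab's actual policy definition; the function name, the accepted spellings of the build argument reference, and the choice to accept upper-case hex are all assumptions made for illustration.

```python
import re

# Hard-coded revisions: exactly 7 or exactly 40 hexadecimal characters.
# Accepting upper-case hex is an assumption; the lab's policy may be stricter.
HEX_REVISION = re.compile(r"(?:[0-9a-fA-F]{7}|[0-9a-fA-F]{40})")

# Dynamic revisions: a reference to the COMMIT_HASH build argument.
# These two spellings are assumed; the real policy may accept others.
BUILD_ARG_REFERENCES = {"$COMMIT_HASH", "${COMMIT_HASH}"}


def revision_is_compliant(value):
    """Return True if a revision label value satisfies the rule described above."""
    value = value.strip().strip('"')
    if value in BUILD_ARG_REFERENCES:
        return True
    return HEX_REVISION.fullmatch(value) is not None
```

With this sketch, a short hash such as `abc1234` and a reference such as `${COMMIT_HASH}` pass, while a free-form value such as `latest` does not.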

Let’s have a look at our pipelines relating to this code. Run the following command, and then open the resulting URL in your host web browser.

$ export EXTERNAL_IP="$(grep ^Host ~/.ssh/config | awk '{print $2}')"

$ echo "http://${EXTERNAL_IP}/demo/policy-as-code-demo/-/pipelines?page=1&scope=branches&ref=policy%2Fdockerfile"

http://1.2.3.4/demo/policy-as-code-demo/-/pipelines?page=1&scope=branches&ref=policy%2Fdockerfile
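The ref query parameter in these URLs carries the branch name with its slash percent-encoded as %2F. As a small aside, the following Python sketch builds the same kind of URL for any branch; the function name is hypothetical and the path is copied from the command above.

```python
from urllib.parse import quote


def pipelines_url(external_ip, branch):
    """Build the pipelines URL for a branch, percent-encoding the branch name."""
    # safe="" makes quote() encode "/" as well, so "policy/dockerfile"
    # becomes "policy%2Fdockerfile".
    ref = quote(branch, safe="")
    return (
        f"http://{external_ip}/demo/policy-as-code-demo/-/pipelines"
        f"?page=1&scope=branches&ref={ref}"
    )
```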

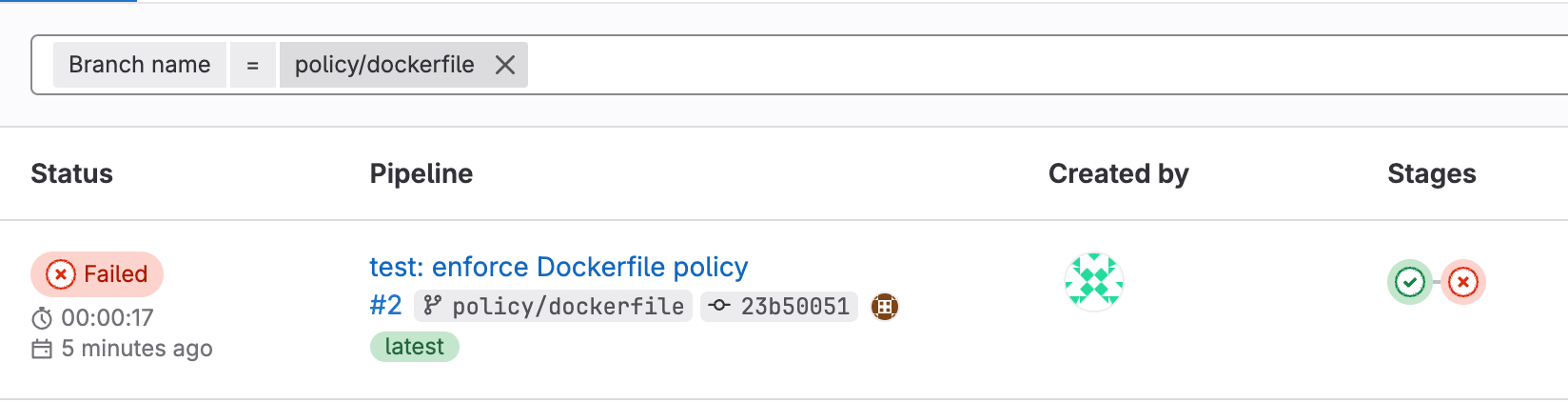

You should see something like this: the pipeline failed! But why did it fail?

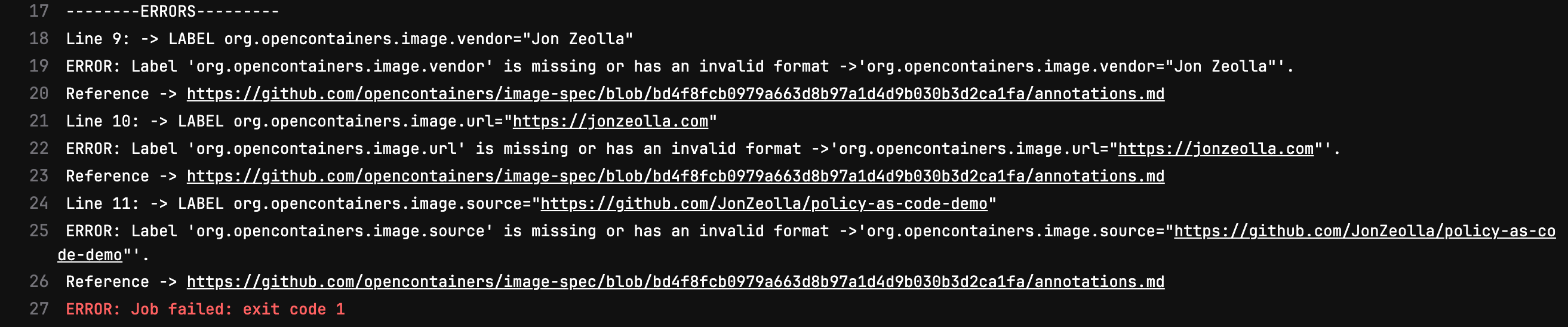

If you dig further into the failed policy job, you can see why:

It appears that this project didn’t match our company’s policy. The vendor, url, and source specified do not contain our Example company’s allowed information, and so we fail the build to indicate to the developer(s) that this needs to be remedied.

Notice, though, that not all was wrong with this code change. What fields were set properly?

Answer

Actually, there were quite a few fields properly set:

Some of these had specific policies, such as requiring certain formats or contents, whereas others simply need to be set, but their contents are able to be any freeform text.
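The two kinds of rule described here (fields that must contain specific content, and fields that only need to be set) can be sketched in a few lines of Python. This is an illustration only, not the policy the lab's pipeline runs: the allowed values, the sample Dockerfile, and the simplification of one quoted label per LABEL line are all assumptions.

```python
import re

# Simplification: one `LABEL key="value"` pair per line.
LABEL_LINE = re.compile(r'^LABEL\s+([\w.\-]+)="([^"]*)"\s*$')

PREFIX = "org.opencontainers.image."

# Hypothetical policy: each key maps to a substring its value must contain,
# or to None when any non-empty free-form text is acceptable.
POLICY = {
    PREFIX + "vendor": "Example",
    PREFIX + "url": "example.com",
    PREFIX + "source": "example.com",
    PREFIX + "title": None,
    PREFIX + "description": None,
}

# Made-up Dockerfile for the usage example; not the one in the demo repository.
SAMPLE = """\
FROM alpine:3
LABEL org.opencontainers.image.vendor="Jon Zeolla"
LABEL org.opencontainers.image.url="https://example.com/demo"
LABEL org.opencontainers.image.title="demo"
LABEL org.opencontainers.image.description="Prints Hello, World!"
"""


def parse_labels(dockerfile_text):
    """Collect label keys and values from simple LABEL lines."""
    labels = {}
    for line in dockerfile_text.splitlines():
        match = LABEL_LINE.match(line.strip())
        if match:
            labels[match.group(1)] = match.group(2)
    return labels


def find_violations(dockerfile_text):
    """Return one message per label that is missing or has disallowed content."""
    labels = parse_labels(dockerfile_text)
    violations = []
    for key, required in POLICY.items():
        value = labels.get(key, "")
        if not value:
            violations.append(f"{key} must be set")
        elif required is not None and required not in value:
            violations.append(f"{key} must contain {required!r}")
    return violations
```

Calling `find_violations(SAMPLE)` reports the vendor (wrong content) and the source (missing), while the url, title, and description pass. A pipeline job would fail the build whenever the returned list is non-empty.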

Infrastructure as Code

Now, let’s look at a different kind of policy, one that we’re all likely familiar with: security scans.

If we take a look at the feat/iac branch and go to the iac/ folder, we see that a developer has been hard at work adding some Infrastructure as Code to our project. Specifically this iac/main.tf file:

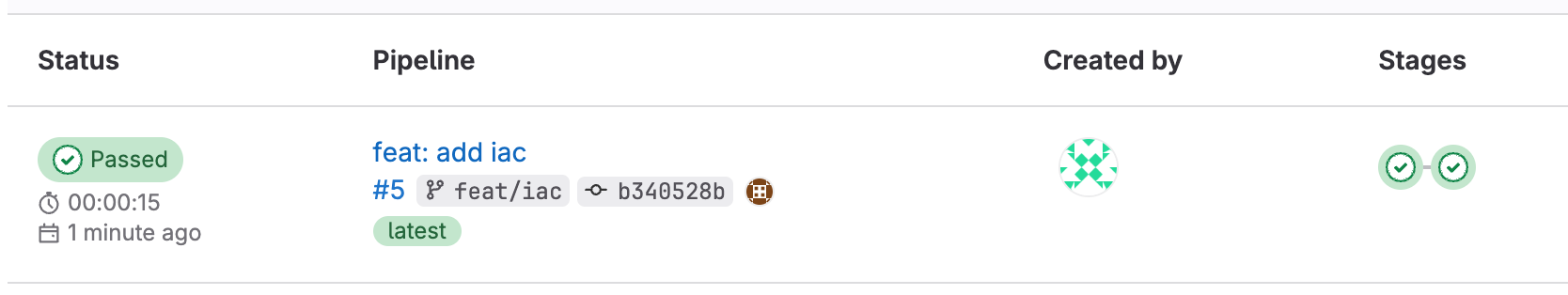

And, if we look at the related pipeline, we should also see that they succeeded in getting the coveted green checkmark:

Can’t find it?

If you’re having a hard time finding the feat/iac pipeline, run the following and then open the displayed URL in the host browser:

$ export EXTERNAL_IP="$(grep ^Host ~/.ssh/config | awk '{print $2}')"

$ echo "http://${EXTERNAL_IP}/demo/policy-as-code-demo/-/pipelines?page=1&scope=branches&ref=feat%2Fiac"

http://1.2.3.4/demo/policy-as-code-demo/-/pipelines?page=1&scope=branches&ref=feat%2Fiac

But how do we know that EC2 server is configured securely? Well, so far we don’t.

Let’s look at how we can add some policy scans to our pipeline, using an open source tool called easy_infra.

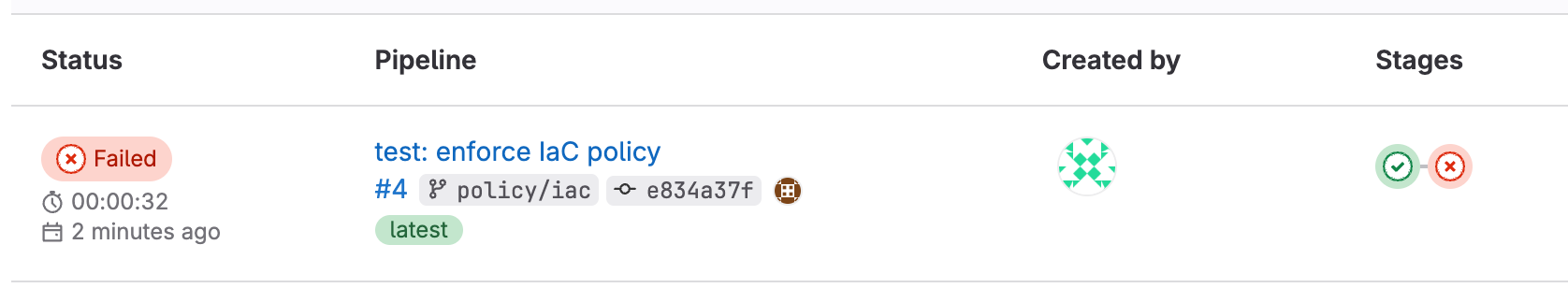

Go to the pipeline associated with the policy/iac branch now. You should see something like this:

Can’t find it?

If you’re having a hard time finding the policy/iac pipeline, run the following and then open the displayed URL in the host browser:

$ export EXTERNAL_IP="$(grep ^Host ~/.ssh/config | awk '{print $2}')"

$ echo "http://${EXTERNAL_IP}/demo/policy-as-code-demo/-/pipelines?page=1&scope=branches&ref=policy%2Fiac"

http://1.2.3.4/demo/policy-as-code-demo/-/pipelines?page=1&scope=branches&ref=policy%2Fiac

The pipeline failed, which is the expected outcome because the code does not comply with our company’s policy. Our policy is that all EC2 instances must use encrypted EBS volumes, and the configuration that was used did not have encrypted disks (among other problems).
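To show what an "encrypted EBS volumes" rule looks like as code, here is a minimal Python sketch that inspects a Terraform plan exported as JSON (for example with `terraform show -json`). It is not the scanner used in the lab's pipeline. The resource addresses in the sample are made up, and treating an instance with no explicit block device settings as non-compliant is an assumption.

```python
def unencrypted_instances(plan):
    """Return addresses of aws_instance resources without encrypted volumes."""
    offenders = []
    for change in plan.get("resource_changes", []):
        if change.get("type") != "aws_instance":
            continue
        after = (change.get("change") or {}).get("after") or {}
        volumes = (after.get("root_block_device") or []) + (
            after.get("ebs_block_device") or []
        )
        # Assumption: an instance that declares no block devices did not ask
        # for encryption, so it is reported as well.
        if not volumes or not all(v.get("encrypted") is True for v in volumes):
            offenders.append(change.get("address", "<unknown>"))
    return offenders


# Made-up plan fragment for illustration; real plans contain many more fields.
SAMPLE_PLAN = {
    "resource_changes": [
        {
            "address": "aws_instance.web",
            "type": "aws_instance",
            "change": {"after": {"root_block_device": [{"encrypted": False}]}},
        },
        {
            "address": "aws_instance.db",
            "type": "aws_instance",
            "change": {"after": {"root_block_device": [{"encrypted": True}]}},
        },
        {
            "address": "aws_s3_bucket.logs",
            "type": "aws_s3_bucket",
            "change": {"after": {}},
        },
    ]
}
```

Here `unencrypted_instances(SAMPLE_PLAN)` flags only `aws_instance.web`; a pipeline job could exit non-zero whenever the list is non-empty.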

That was cool. All we did was change containers though. How did it add policy enforcement?

Well, easy_infra has what we call an opinionated runtime. It dynamically adds the right policy and security scanning tools when certain types of commands are run inside of the container, such as terraform in our example, but it also supports tools like ansible and aws cloudformation.
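The idea of an opinionated runtime can be sketched as a thin wrapper that runs scans before handing off to the requested tool. The following Python sketch only illustrates the concept and makes no claim about how easy_infra is implemented; the scanner name and its arguments are hypothetical placeholders.

```python
# Hypothetical mapping from a wrapped tool to the scans that run before it.
# "example-iac-scanner" is a placeholder, not a real program.
SCANS_BY_TOOL = {
    "terraform": [["example-iac-scanner", "--directory", "."]],
}


def plan_commands(argv):
    """Return the scan commands for the tool, followed by the original command."""
    if not argv:
        raise ValueError("expected a command to run")
    scans = SCANS_BY_TOOL.get(argv[0], [])
    return [list(scan) for scan in scans] + [list(argv)]


def run(argv, executor):
    """Run each planned command in order and stop at the first failure.

    `executor` takes a command list and returns its exit code;
    subprocess.call is one function with that shape.
    """
    for command in plan_commands(argv):
        exit_code = executor(command)
        if exit_code != 0:
            # A failed scan stops the run before the wrapped tool executes.
            return exit_code
    return 0
```

Because the wrapper sits in front of the tool, switching a pipeline job to a container that ships it is enough to gain enforcement, which matches what we saw when we only changed containers.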

Additional Information

OSCAL

So far, we’ve seen some Policy as Code assessment outputs in various formats. What if we wanted to centralize, standardize, and globally assess these policies?

NIST’s Open Security Controls Assessment Language is currently the most well-designed and adopted framework for articulating the state of security controls and security assessments. While it is still in its infancy, it has been in development for many years, having its first release in mid-2019.

The assessment layer is planned to be expanded in OSCAL 2.0 to support the automation of assessment activities.

In this video (skip to about 4:40 for the first mention), David Waltermire discusses how OSCAL is optimized for a controls-based risk assessment approach.

OSCAL supports YAML, JSON, and XML-based outputs via its Metaschema project, although some projects have been adopting graph-based views of OSCAL data to better represent relationships, such as using Neo4j.

Pydantic data models have also been developed to support the validation of OSCAL data as it passes between systems, and are particularly valuable in supporting API-driven development when integrated into projects such as FastAPI.

Numerous projects are adopting OSCAL, including FedRAMP Automation from the GSA.

See also 12 Essential Requirements for Policy Enforcement and Governance with OSCAL by Robert Ficcaglia at CloudNativeSecurityCon 2023.

Other interesting projects include Lula, which ingests OSCAL and builds on top of Kyverno to automate control validation at runtime.

And if you’re interested in getting your hands dirty, Go developers will probably want to look at Defense Unicorns’ go-oscal or GoComply’s OSCALKit, and Python developers could leverage the models in IBM’s compliance-trestle.

Another interesting project uses OSCAL models and generates diagrams to help visualize your security controls.

Runtime Policy as Code tools

Other popular Runtime Policy as Code tools include OPA/Gatekeeper and their flexible, multi-purpose Rego policy language, as well as Kyverno, which is a Kubernetes-only policy engine that supports validation and enforcement of policy in a Kubernetes cluster.

Falco also supports powerful policy monitoring capabilities focused on container and system runtimes, via its eBPF support.

Congratulations 🎉

Great work! You’ve finished the lab.

If you’re interested in more work like this, please stay tuned. I will be updating this lab in the coming weeks, as well as writing and running additional labs soon.

Have any ideas or feedback on this lab? Connect with me on LinkedIn and send me a message.

If you’d like more content like this, check out the SANS SEC540 class for 5 full days of Cloud Security and DevSecOps training.

Cleanup

Don’t forget to clean up your Cloud9 environment! Deleting the environment will terminate the EC2 instance as well.

Appendix: Common Issues

Finding your root password

grep 'root' ~/logs/entrypoint.log